Project Alumni: Shawna Thomas, Chinwe Ekenna

Supported By: NSF, Schlumberger Faculty for the Future Fellowship

|

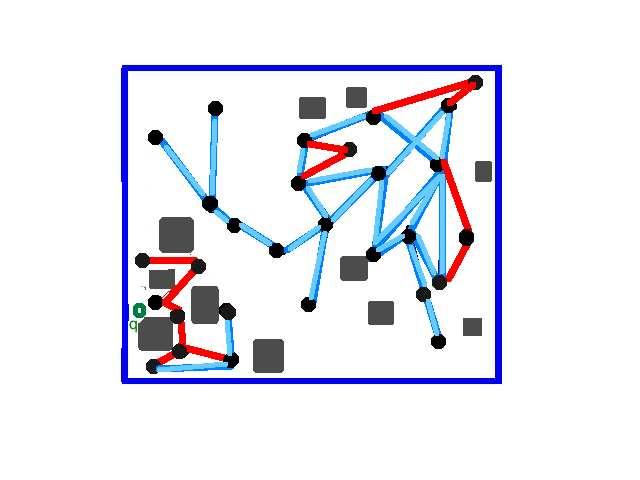

We present an algorithm that considers the performance history in the vicinity of the current connection attempt and uses this information to select good candidates for connection. It thus removes any need to explicitly partition the environment which is burdensome and typically difficult to do. Experimental results show that the method learns and adapts in heterogeneous environments, and finds solution paths faster for single and multi-query scenarios and builds roadmaps with better coverage and connectivity.

Reinforcement Learning Example |

In this work we use machine learning techniques to intelligently combine and use any existing PRM method. This affords us the opportunity to use all available PRM method irrespective of the problem scenario. We show in our experiments that we are able to remove the overhead of deciding what PRM method to use for a given input problem. We test on a variety of heterogeneous environment including protein folding simulations and we utilize the strengths (ability to generate samples in narrow passages) of these methods when needed while minimizing their weaknesses (time needed to solve a query).

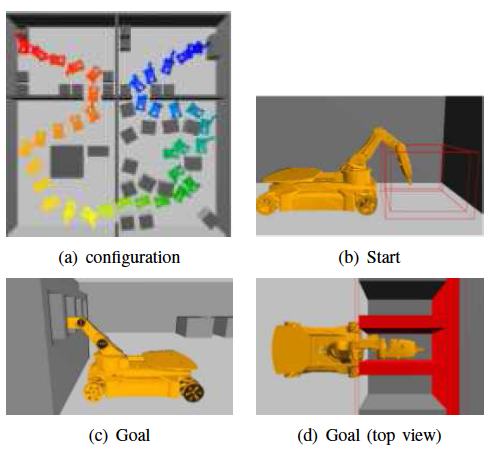

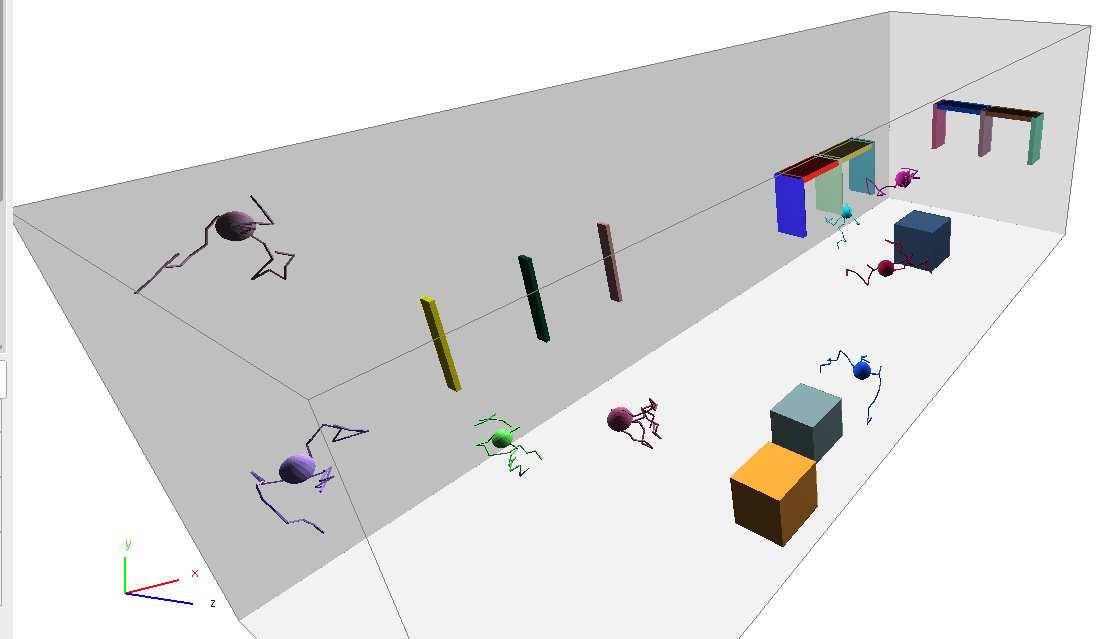

Heterogenous Environment Example |

Probabilistic Roadmap Methods (PRMs) are widely used motion planning methods that sample robot configurations (nodes) and connect them to form a graph (roadmap) containing feasible trajectories. Many PRM variants propose different strategies for each of the steps and choosing among them is problem dependent. Planning in heterogeneous environments and/or on parallel machines necessitates dividing the problem into regions where these choices have to be made for each one. Hand-selecting the best method for each region becomes infeasible. In particular, there are many ways to select connection candidates, and choosing the appropriate strategy is input dependent.

In this work, we present a general connection framework that adaptively selects a neighbor finding strategy from a candidate set of options. Our framework learns which strategy to use by examining their success rates and costs. It frees the user of the burden of selecting the best strategy and allows the selection to change over time.

We perform experiments on rigid bodies of varying geometry and articulated linkages up to 37 degrees of freedom. Our results show that strategy performance is indeed problem/region dependent, and our adaptive method harnesses their strengths. Over all problems studied, our method differs the least from manual selection of the best method, and if one were to manually select a single method across all problems, the performance can be quite poor. Our method is able to adapt to changing sampling density and learns different strategies for each region when the problem is partitioned for parallelism.

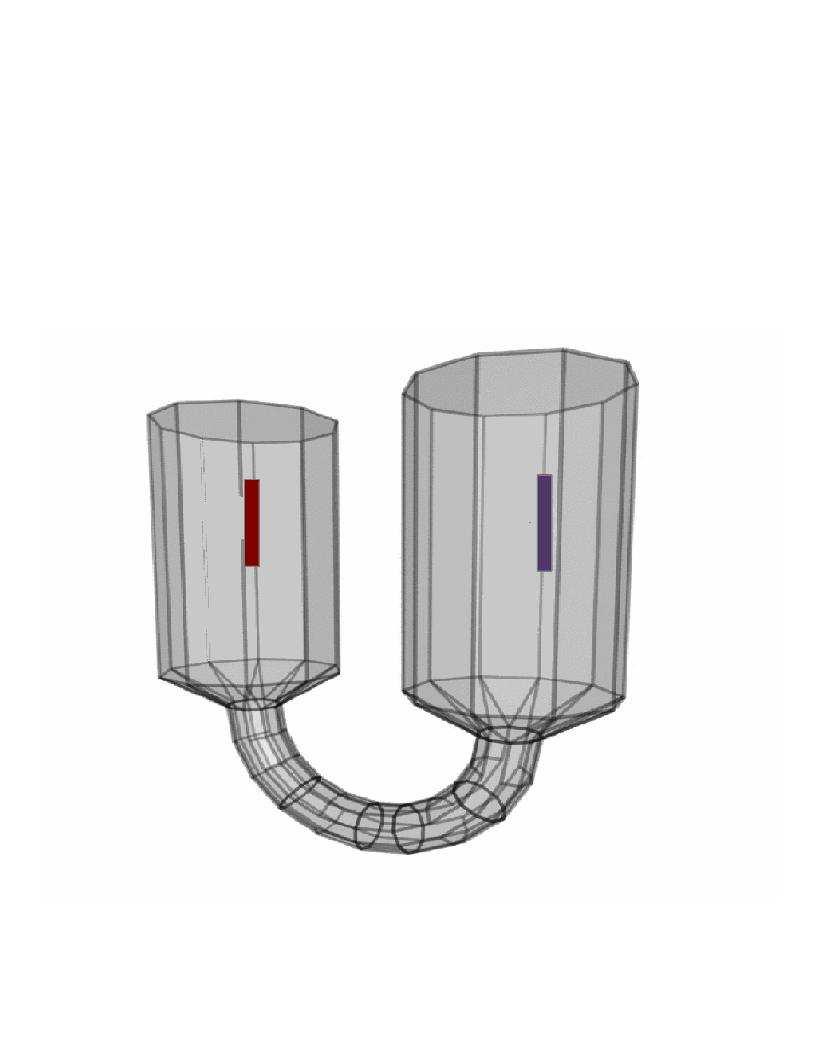

U-Tunnel Environment |

Maze Heterogenous Environment |

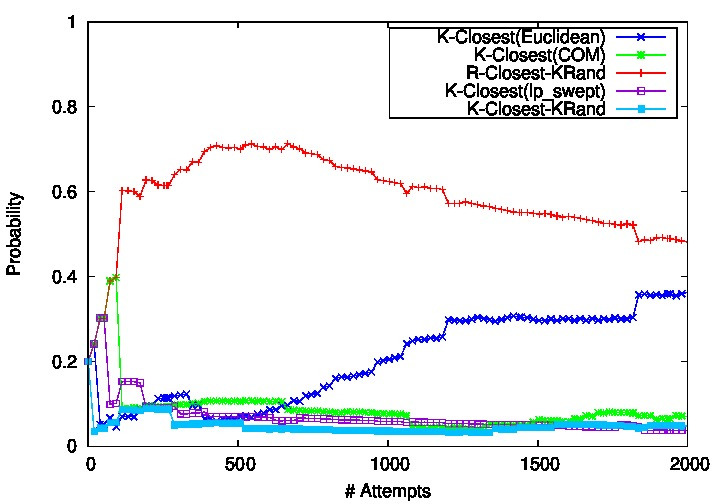

The U-tunnel environment has a long rigid body robot which behaves much differently than the compact robots in the prior environments. Here, ANC performs significantly better than the other methods in terms of running time and roadmap size and near the best in terms of Edges/Nodes ratio. Figure 3 provides the learning plot. Again, ANC is able to adapt and learn a good connection strategy.

Learning Profile for a U-tunnel Environment |

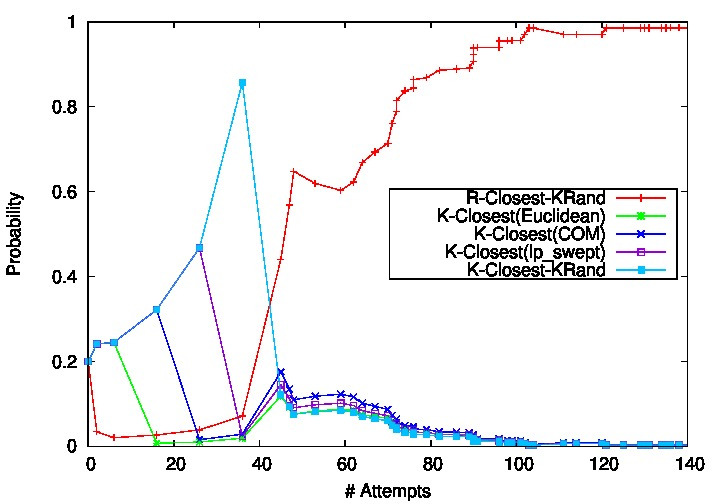

The maze environment result plots shows the probability that each connection method is selected in the ANC framework over time for a single representative run. Figure 2 shows that early on R-Closest,K-Rand(Euclidean) is selected. However, as roadmap construction progresses, the success of this method begins to level out and the probability of K-Closest,R-Rand(Euclidean) begins to increase and then levels off.

Learning Profile for a maze Environment |

Probabilistic Roadmap Methods (PRMs) solve the motion planing problem by constructing a roadmap (or graph) that models the motion space when feasible local motions exist. PRMs and variants contain several phases during roadmap generation i.e., sampling, connection, and query. Some work has been done to apply machine learning to the connection phase to decide which variant to employ, but it uses a global learning approach that is inefficient in heterogeneous situations.

We present an algorithm that instead uses local learning: it only considers the performance history in the vicinity of the current connection attempt and uses this information to select good candidates for connection. It thus removes any need to explicitly partition the environment which is burdensome and typically difficult to do. Our results show that our method learns and adapts in heterogeneous environments, including a KUKA youBot with a fixed and mobile base. It finds solution paths faster for single and multi-query scenarios and builds roadmaps with better coverage and connectivity given a fixed amount of time in a wide variety of input problems. In all cases, our method outperforms the previous adaptive connection method and is comparable or better than the best individual method.

Local learning region |

Local learning scenario |

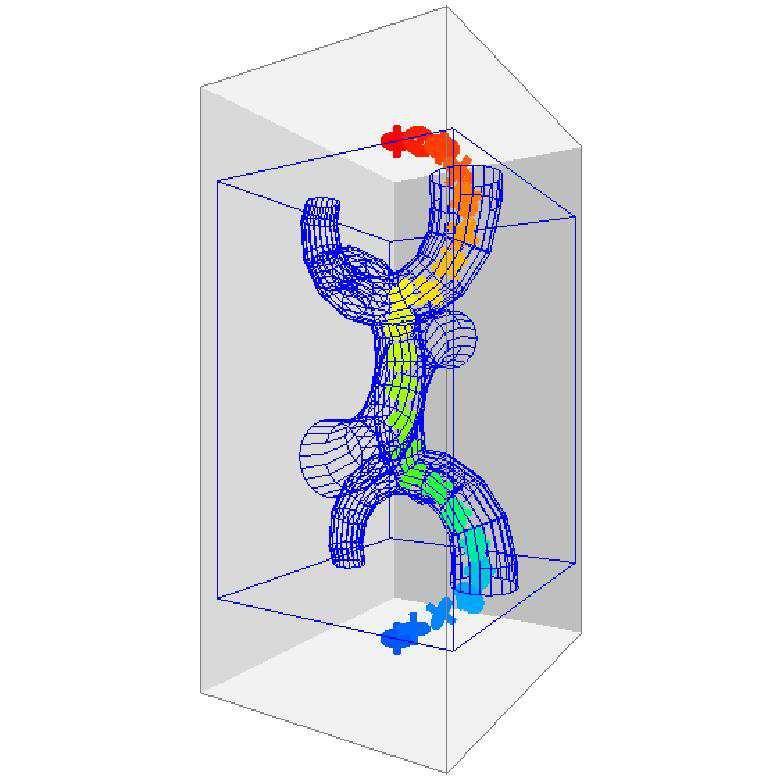

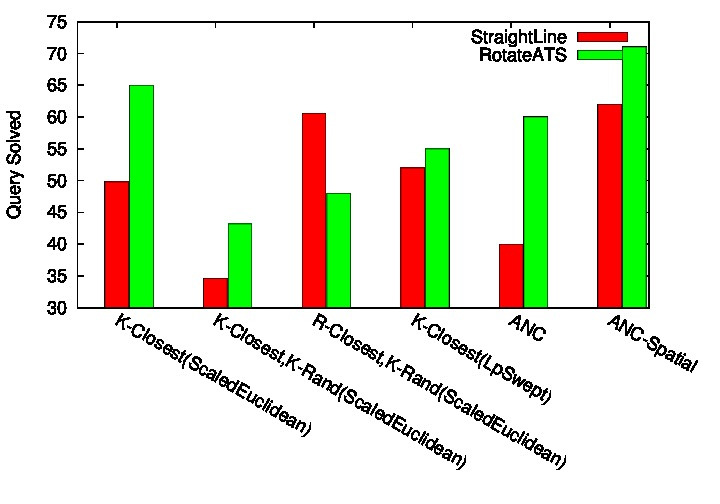

The 36 DOF JellyFish environment consist of a robot has three articulated arms attached to a sphere where each arm has 10 links. The aim of this experiment is to see the performance of ANC-Spatial on a highly articulated and complex robot. Different types of obstacles are placed throughout the envi- ronment. We again provide 8 query samples and count how many possible queries can be solved. Roadmap construction is allowed to take 1500 seconds. Figure 6 (bars in red) shows the percentage of queries (6(a)) and the percentage of nodes in the largest connected components (6(b)) using the StraightLine local planner for each method. ANC-Spatial again solves the greatest percent- age of queries with a higher percentage of its nodes in the largest connected component. ANC-Spatial also outperforms ANC in this articulated linkage environment. This environ- ment is prone to bad partitioning choices because a bad partitioning could easily form a narrow passage that cannot be traversed by this highly articulated linkage. Thus it is even more imperative that localized learning regions are dynamic. Figure 6 (bars in green) shows results using the RotateATS local planner. ANC-Spatial also outperforms the other methods in terms of percentage of queries solved. We also have a good percentage of nodes in the largest connected com- ponent. Even a large change in local planners (as evidenced by changes in individual connection method performance) results in small change in ANC-Spatial performance.

36 DOF JellyFish Environment |

36 DOF JellyFish Time Results |

36 DOF JellyFish Node Results> |

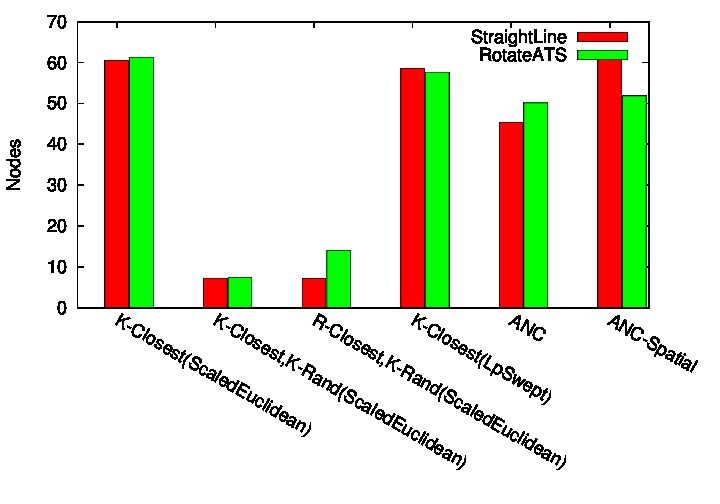

KukayouBot Environment |

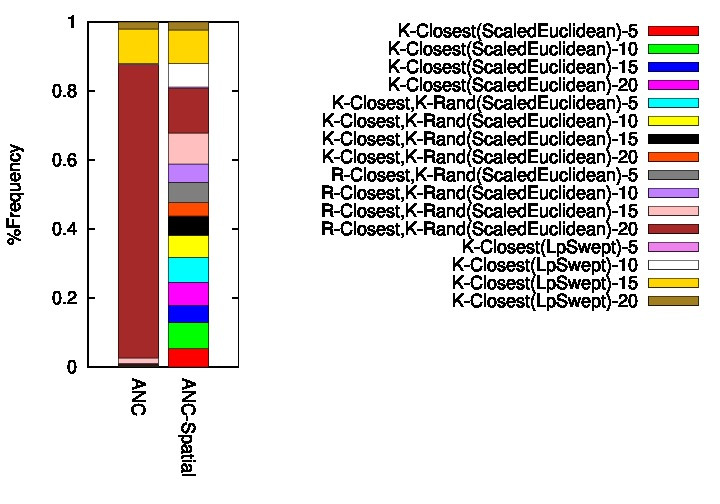

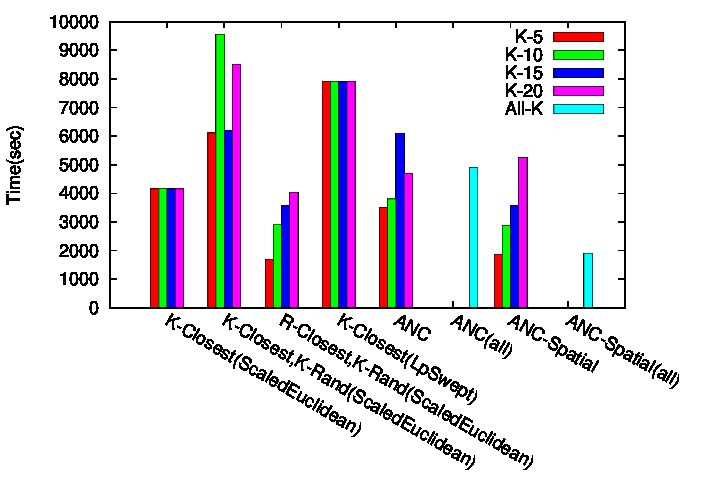

KukayouBot Frequency of Usage |

KukayouBot Time Results |

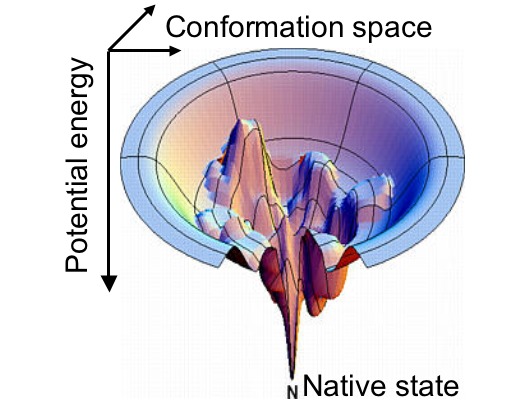

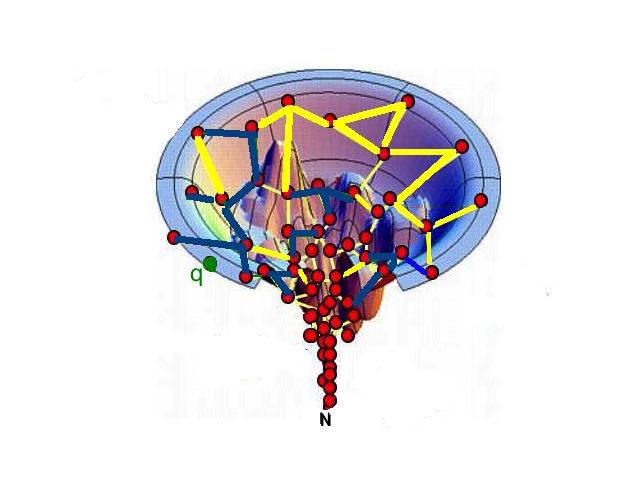

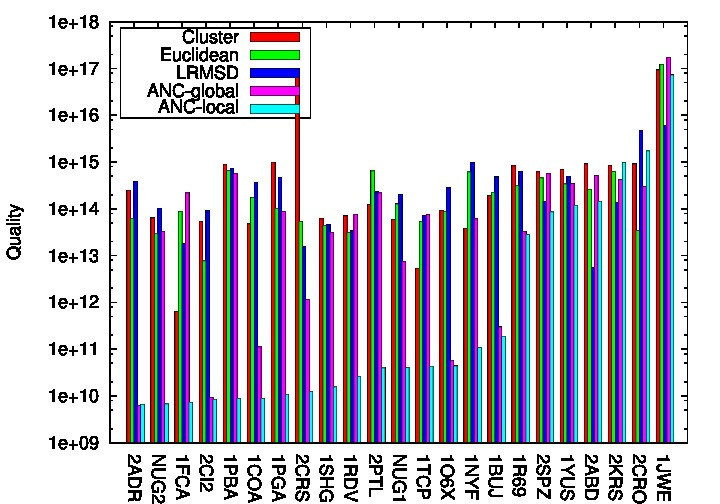

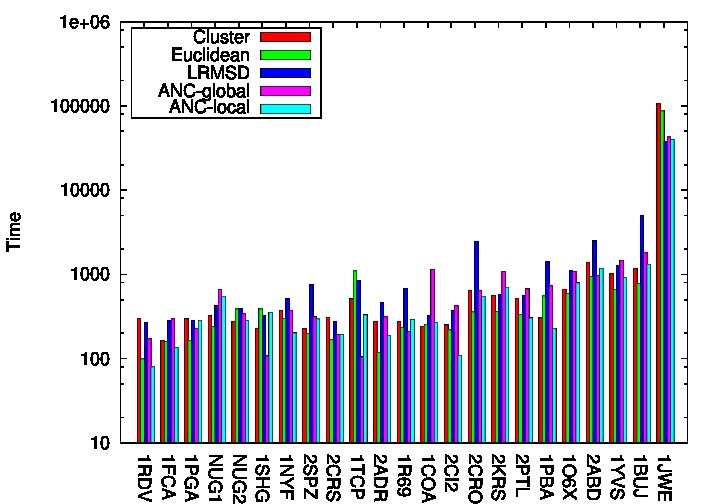

Simulating protein folding motions is an important problem in computational biology. Motion planning algorithms such as Probabilistic Roadmap Methods (PRMs) have been successful in modeling the protein folding landscape. PRMs and variants contain several phases (i.e., sampling, connection, and path extraction). Global machine learning has been applied to the connection phase but is inefficient in situations with varying topology, such as those typical of folding landscapes. Results: We present a local learning algorithm that considers the past performance near the current connection attempt as a basis for learning. It is sensitive not only to different types of landscapes but also to differing regions in the landscape itself, removing the need to explicitly partition the landscape. We perform experiments on 23 proteins of varying secondary structure makeup with 52–114 residues. Our method models the landscape with better quality and comparable time to the best performing individual method and to global learning.

Folding Landscape |

Connection Learning on Folding Landscape |

||

| Applying Learning to Computational Biology | |||

Quality Results |

|||

Time Results |

|||

| Applying Learning to Computational Biology | |||

Improved Sampling Based Motion Planning Through Local Learning, Chinwe Pamela Ekenna, Improved Sampling Based Motion Planning Through Local Learning, Aug 2016. @phdthesis{ekenna2016improved, Every motion made by a moving object is either planned implicitly, e.g., human natural movement from one point to another, or explicitly, e.g., pre-planned information about where a robot should move in a room to effectively avoid colliding with obstacles. Motion planning is a well-studied concept in robotics and it involves moving an object from a start to goal configuration. Motion planning arises in many application domains such as robotics, computer animation (digital actors), intelligent CAD (virtual prototyping and training) and even computational biology (protein folding and drug design). Interestingly, a single class of planners, sampling-based planners have proven effective in all these domains. Probabilistic Roadmap Methods (PRMs) are one type of sampling-based planners that sample robot configurations (nodes) and connect them via viable local paths (edges) to form a roadmap containing representative feasible trajectories. The roadmap is then queried to find solution paths between start and goal configurations. Different PRM strategies perform differently given different input parameters, e.g., workspace environments and robot definitions. Motion planning, however, is computationally hard âÃÂàit requires geometric path planning which has been shown to be PSPACE hard, complex representational issues for robots with known physical, geometric and temporal constraints, and challenging mapping/representing requirements for the workspace environment. Many important environments, e.g., houses, factories and airports, are heterogeneous, i.e., contain free, cluttered and narrow spaces. Heterogeneous environments, however, introduce a new set of problems for motion planning and PRM strategies because there is no ideal method suitable for all regions in the environment. In this work we introduce a technique that can adapt and apply PRM methods suitable for local regions in an environment. The basic strategy is to first identify a local region of the environment suitable for the current action based on identified neighbors. Next, based on past performance of methods in this region, adapt and pick a method to use at this time. This selection and adaptation is done by applying machine learning. By performing the local region creation in this dynamic fashion, we remove the need to explicitly partition the environment as was done in previous methods and which is difficult to do, slows down performance and includes the difficult process of determining what strategy to use even after making an explicit partitioning. Our method handles and removes these overheads. We show benefits of this approach in both planning robot motions and in protein folding simulations. We perform experiments on robots in simulation with different degrees of freedom and varying levels of heterogeneity in the environment and show an improvement in performance when our local learning method is applied. Protein folding simulations were performed on 23 proteins and we note an improvement in the quality of pathways produced with comparable performance in terms of time needed to build the roadmap.

Keywords: Machine Learning, Sampling-Based Motion Planning

Links : [Published] BibTex

title={Improved sampling based motion planning through local learning},

author={Ekenna, Chinwe Pamela},

year={2016}

}Abstract

Adaptive Local Learning in Sampling Based Motion Planning for Protein Folding, Chinwe Ekenna and Shawna Thomas and Nancy M. Amato, BMC Syst Biol, Special Issue from the 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Vol: 10, Issue: 49, pp. 165-179, Aug 2016. DOI: 10.1186/s12918-016-0297-9 @article{ekenna-bmcsb-2016 Background

Simulating protein folding motions is an important problem in computational biology. Motion planning algorithms, such as Probabilistic Roadmap Methods, have been successful in modeling the folding landscape. Probabilistic Roadmap Methods and variants contain several phases (i.e., sampling, connection, and path extraction). Most of the time is spent in the connection phase and selecting which variant to employ is a difficult task. Global machine learning has been applied to the connection phase but is inefficient in situations with varying topology, such as those typical of folding landscapes.

Results

We develop a local learning algorithm that exploits the past performance of methods within the neighborhood of the current connection attempts as a basis for learning. It is sensitive not only to different types of landscapes but also to differing regions in the landscape itself, removing the need to explicitly partition the landscape. We perform experiments on 23 proteins of varying secondary structure makeup with 52ÃÂÃÂÃÂâÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ114 residues. We compare the success rate when using our methods and other methods. We demonstrate a clear need for learning (i.e., only learning methods were able to validate against all available experimental data) and show that local learning is superior to global learning producing, in many cases, significantly higher quality results than the other methods.

Conclusions

We present an algorithm that uses local learning to select appropriate connection methods in the context of roadmap construction for protein folding. Our method removes the burden of deciding which method to use, leverages the strengths of the individual input methods, and it is extendable to include other future connection methods.

Keywords: Computational Biology, Protein Folding

Links : [Published] BibTex

, author = "Chinwe Ekenna and Shawna Thomas and Nancy M. Amato"

, title = "Adaptive Local Learning in Sampling Based Motion Planning for Protein Folding"

, journal = "BMC Syst Biol, Special Issue from IEEE International Conference on Bioinformatics and Biomedicine (BIBM)"

, volume = "10"

, number = "49"

, pages = "165--179"

, year = "2016"

, month = "August"

, doi = "10.1186/s12918-016-0297-9"

}

Abstract

The Impact of Approximate Methods on Local Learning in Motion Planning, Diane Uwacu, Chinwe Ekenna, Shawna Thomas, Nancy Amato, 1st International Workshop on Robot Learning and Planning (RLP 2016), in conjunction with RSS 2016, pp. 38-44, Ann Arbor, MI, Jun 2016. @inproceedings{uwacu-wrlp-2016 Machine learning methods have been applied to many motion planning algorithms including probabilistic roadmap methods (PRM). There are many variants of these methods and choosing the best one every time is hard and depends on local properties of the environment. A successful learning approach has been developed to offset this issue. This learning approach was applied to PRMs to help decide intelligently what method to utilize in dynamically created local regions of the environment or task space. It used exact neighbor finding approaches and removed the need to partition environments to get improved results. In this work we make further advances by introducing approximate neighbor finder methods. It has been established that approximate neighbor finding methods are faster than exact methods, still work well in connecting nodes to edges in PRMs, and that connection is robust to noise. We study what happens when noise is introduced into learning by using approximate methods instead of already studied exact methods. We show that the impact of noise on learning depends on how much learning needs to take place given the topology of the environment. Our results demonstrate a correlation between heterogeneity and the need for learning over a local region.

Keywords: Machine Learning, Sampling-Based Motion Planning

Links : [ArXiv] [Manuscript] BibTex

, author = "Diane Uwacu and Chinwe Ekenna and Shawna Thomas and Nancy M. Amato"

, title = "The Impact of Approximate Methods on Local Learning in Motion Planning"

, booktitle = "1st International Workshop on Robot Learning and Planning (RLP 2016), in conjunction with RSS 2016"

, month = "June"

, year = "2016"

, note = "http://chitsazlab.org/robotics/rlp2016/RLP_2016_paper_11.html"

}

Abstract

Improved Roadmap Connection via Local Learning for Sampling Based Planners, Chinwe Ekenna, Diane Uwacu, Shawna Thomas, Nancy Amato, Proc. IEEE/RSJ Int. Conf. Intel. Rob. Syst. (IROS), pp. 3227-3234, Hamburg, Germany, Oct 2015. DOI: 10.1109/IROS.2015.7353825 @INPROCEEDINGS{7353825, author={C. {Ekenna} and D. {Uwacu} and S. {Thomas} and N. M. {Amato}}, booktitle={2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, title={Improved roadmap connection via local learning for sampling based planners}, year={2015}, volume={}, number={}, pages={3227-3234},} Probabilistic Roadmap Methods (PRMs) solve the motion planing problem by constructing a roadmap (or graph) that models the motion space when feasible local motions exist. PRMs and variants contain several phases during roadmap generation i.e., sampling, connection, and query. Some work has been done to apply machine learning to the connection phase to decide which variant to employ, but it uses a global learning approach that is inefficient in heterogeneous situations. We present an algorithm that instead uses local learning: it only considers the performance history in the vicinity of the current connection attempt and uses this information to select good candidates for connection. It thus removes any need to explicitly partition the environment which is burdensome and typically difficult to do. Our results show that our method learns and adapts in heterogeneous environments, including a KUKA youBot with a fixed and mobile base. It finds solution paths faster for single and multi-query scenarios and builds roadmaps with better coverage and connectivity given a fixed amount of time in a wide variety of input problems. In all cases, our method outperforms the previous adaptive connection method and is comparable or better than the best individual method.

Keywords: Machine Learning, Motion Planning, Sampling-Based Motion Planning

Links : [Published] BibTex

Abstract

Studying Learning Techniques in Different Phases of PRM Construction, Chinwe Ekenna, Diane Uwacu, Shawna Thomas, Nancy Amato, In Machine Learning in Planning and Control of Robot Motion Workshop (IROS-MLPC), Hamburg, Germany, Oct 2015. DOI: 10.1109/IROS.2015.7353825 no bib for now. Probabilistic Roadmap Methods (PRMs) solve the motion planning problem in two phases by sampling free configurations and connecting them together to build a map that is used to find a valid path. Existing algorithms are highly sensitive to the topology of the problem, and their efficiency depends on applying them to a compatible problem. Reinforcement learning has been applied to motion planning and rewards the action performed by planners during either sampling or connection, but not both. Previous work computed a global reward and action scheme, which saw a setback when heterogeneous environments were concerned. Local learning (connection) was recently introduced to offset this weakness identified during global learning, and there was some improvement in planner performance. These different learning schemes (global and local) have shown strengths and weaknesses individually. In this paper, we investigate local learning for sampling. We study what type of learning to apply when, and how the two phases of PRM roadmap construction interact, which has not been investigated before. We show the performance using each scheme on a KUKAyouBot, an 8 degree of freedom robot, and analyze what happens when they are all combined during roadmap construction

Keywords: Machine Learning, Sampling-Based Motion Planning

Links : [Published] BibTex

Abstract

Adaptive Neighbor Connection using Node Characterization , Chinwe Ekenna , Shawna Thomas , Nancy M. Amato , N/A, N/A, Jan 2014. @MISC{Ekenna_adaptiveneighbor, Abstract. Sampling-based motion planning has been successful in plan-ning the motion for a wide variety of robot types. An important primitive of these methods involves connecting nodes by selecting candidate neigh-bors and checking the path between them. Recently, an approach called Adaptive Neighbor Connection (ANC) was proposed to automate neigh-bor selection using machine learning techniques. It adaptively selects a neighbor connection strategy from a candidate set of options and learns which strategy to use by examining their success rates and costs. In this work, we extend ANCÃÂâÃÂÃÂÃÂÃÂs reward function by characterizing the types of nodes added (including information about their connectivity) after each connection attempt. In doing so, we gain insight into the na-ture of these nodes and their ability to improve roadmap quality. We also refine ANCÃÂâÃÂÃÂÃÂÃÂs cost function by considering the computation time spent during each connection attempt so as to potentially learn faster and more efficient connectors. We show improved performance over selecting a sin-gle connection strategy and over ignoring node characterization during learning. We present results in a variety of 2D and 3D environments and are able to solve queries in half the time on average compared to the best single connection strategy and to the original ANC work. 1

Keywords: Machine Learning, Sampling-Based Motion Planning

Links : [Published] BibTex

author = {Chinwe Ekenna and Shawna Thomas and Nancy M. Amato},

title = {Adaptive Neighbor Connection using Node Characterization},

year = {}

}Abstract

Adaptive Neighbor Connection for PRMs: A Natural Fit for Heterogeneous Environments and Parallelism, Chinwe Ekenna, Sam Ade Jacobs, Shawna Thomas, Nancy M. Amato, In. Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, Nov 2013. DOI: 10.1109/IROS.2013.6696510 @INPROCEEDINGS{6696510, author={C. {Ekenna} and S. A. {Jacobs} and S. {Thomas} and N. M. {Amato}}, booktitle={2013 IEEE/RSJ International Conference on Intelligent Robots and Systems}, title={Adaptive neighbor connection for PRMs: A natural fit for heterogeneous environments and parallelism}, year={2013}, volume={}, number={}, pages={1249-1256}, doi={10.1109/IROS.2013.6696510}} Probabilistic Roadmap Methods (PRMs) are widely used motion planning methods that sample robot configurations (nodes) and connect them to form a graph (roadmap) containing feasible trajectories. Many PRM variants propose different strategies for each of the steps and choosing among them is problem dependent. Planning in heterogeneous environments and/or on parallel machines necessitates dividing the problem into regions where these choices have to be made for each one. Hand-selecting the best method for each region becomes infeasible. In particular, there are many ways to select connection candidates, and choosing the appropriate strategy is input dependent. In this paper, we present a general connection framework that adaptively selects a neighbor finding strategy from a candidate set of options. Our framework learns which strategy to use by examining their success rates and costs. It frees the user of the burden of selecting the best strategy and allows the selection to change over time. We perform experiments on rigid bodies of varying geometry and articulated linkages up to 37 degrees of freedom. Our results show that strategy performance is indeed problem/region dependent, and our adaptive method harnesses their strengths. Over all problems studied, our method differs the least from manual selection of the best method, and if one were to manually select a single method across all problems, the performance can be quite poor. Our method is able to adapt to changing sampling density and learns different strategies for each region when the problem is partitioned for parallelism.

Keywords: Parallel Algorithms, Parallel Planning, Sampling-Based Motion Planning

Links : [Published] BibTex

Abstract