Project Alumni: Ali Agha-mohammadi, Aditya Mahadevan, Jory Denny, Suman Chakravorty (Aerospace Engineering, TAMU)

Interns and undergrad students: Saurav Agarwal, Daniel Tomkins

Supported By: NSF


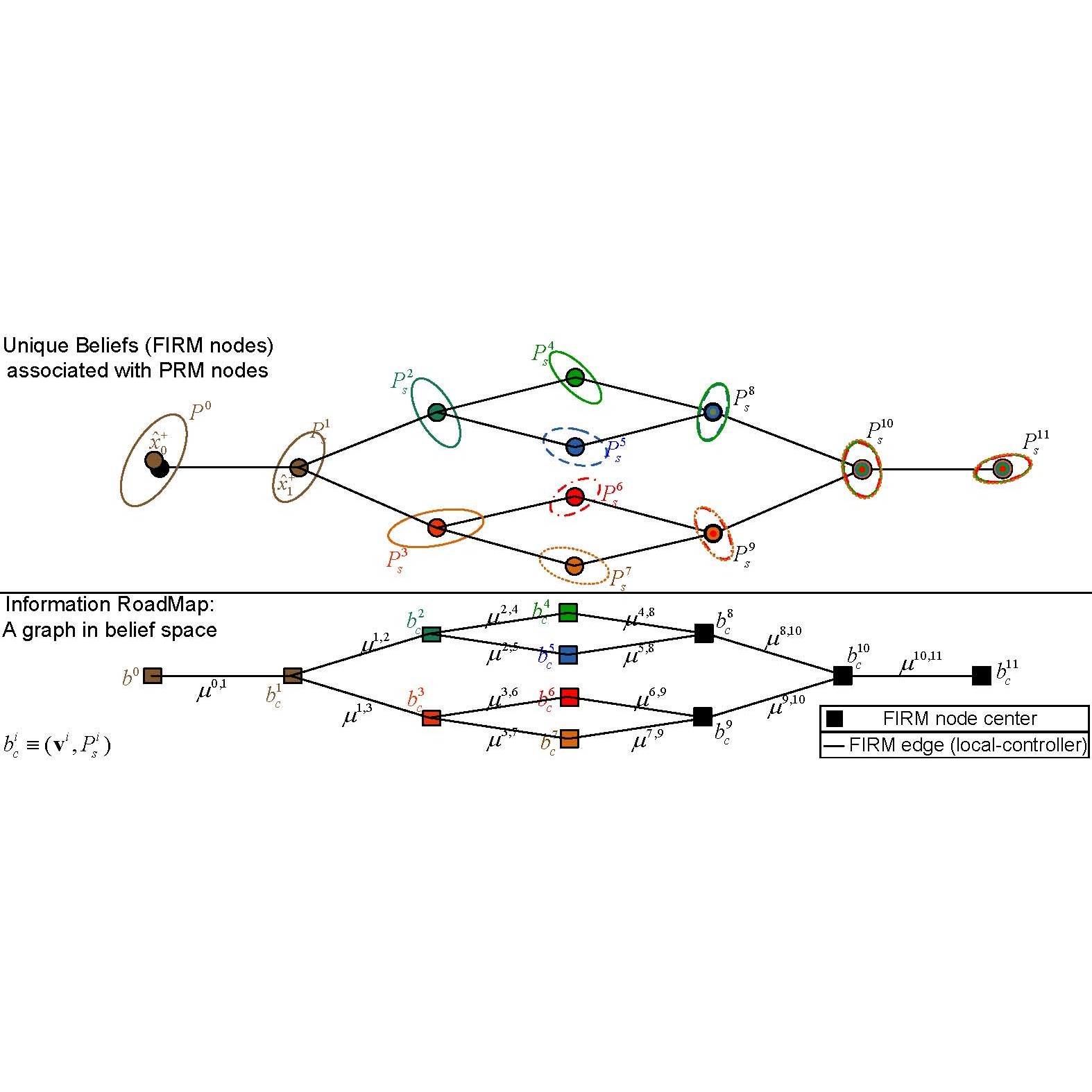

The objective of our research is to present the feedback-based information roadmap (FIRM), a multi-query approach to planning under uncertainty that is a belief-space variant of probabilistic roadmap (PRM) methods. The crucial feature of FIRM is that the costs associated with the edges are independent of each other; in this sense, it is the first method that generates a graph in belief space that preserves the optimal substructure property. From a practical point of view, FIRM is a robust and reliable planning framework. It is robust because the solution is a feedback policy, so there is no need for expensive replanning. It is reliable because accurate collision probabilities can be computed along the edges. In addition, FIRM is scalable: the complexity of planning with FIRM is a constant multiple of the complexity of planning with PRM. FIRM is developed as an abstract framework, and concrete instantiations are obtained by adopting different belief stabilizers. For example, using stationary linear quadratic Gaussian (SLQG) controllers as belief stabilizers, we introduce the so-called SLQG-FIRM.
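Because edge costs are independent, the graph-level problem can in principle be solved by dynamic programming over the nodes, which yields a feedback policy rather than a single path. The following is a minimal sketch of that idea, not the authors' implementation. The edge format `(next_node, edge_cost, success_probability)`, the `failure_cost` penalty, and all numbers are hypothetical and chosen only for illustration.

```python
def solve_roadmap_dp(edges, goal, failure_cost=100.0, iters=200):
    """Value iteration over a roadmap whose edges are local feedback controllers.

    edges: dict mapping node -> list of (next_node, edge_cost, success_probability).
    Returns (cost_to_go, policy), where policy maps each node to the best next node.
    All quantities are illustrative assumptions, not values from the FIRM papers.
    """
    nodes = set(edges) | {goal}
    for outgoing in edges.values():
        nodes.update(nxt for nxt, _, _ in outgoing)

    cost_to_go = {n: 0.0 for n in nodes}
    policy = {}
    for _ in range(iters):
        updated = {}
        for n in nodes:
            if n == goal:
                updated[n] = 0.0
                continue
            best_cost, best_next = None, None
            for nxt, cost, p_success in edges.get(n, []):
                # Expected cost: edge cost, plus cost-to-go on success,
                # plus a fixed penalty if the edge fails (e.g. collision).
                q = cost + p_success * cost_to_go[nxt] + (1.0 - p_success) * failure_cost
                if best_cost is None or q < best_cost:
                    best_cost, best_next = q, nxt
            if best_next is None:
                # Dead end: no outgoing controller, treat as failure.
                updated[n] = failure_cost
            else:
                updated[n] = best_cost
                policy[n] = best_next
        cost_to_go = updated
    return cost_to_go, policy


# Hypothetical four-node roadmap: the short route through B is risky,
# the longer route through C is safe.
example_edges = {
    "A": [("B", 1.0, 0.9), ("C", 2.0, 1.0)],
    "B": [("G", 1.0, 0.9)],
    "C": [("G", 1.5, 1.0)],
}
cost_to_go, policy = solve_roadmap_dp(example_edges, goal="G")
print(policy["A"], round(cost_to_go["A"], 3))
```

The returned `policy` assigns an action to every node, so a robot that ends up at an unexpected node can simply look up its next edge instead of replanning.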

Stationary Linear Quadratic Gaussian (SLQG) Feedback-based Information Roadmap is an instantiation of FIRM built on a Kalman filter as the state estimator and a linear quadratic regulator (LQR) as the controller. Together they form the linear quadratic Gaussian (LQG) controller, which drives beliefs to a stationary point; that is, it acts as the belief stabilizer.
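To make the notion of a stationary point concrete, the sketch below iterates the Kalman filter and LQR Riccati recursions to their fixed points for a scalar linear system. This is a simplified illustration under stated assumptions, not the SLQG-FIRM implementation: the model $x_{k+1} = a x_k + b u_k + w_k$, $z_k = h x_k + v_k$ with noise variances `q` and `r`, the cost weights `w_x` and `w_u`, and all numeric values are hypothetical. A real system would use the matrix form of these equations.

```python
def stationary_kalman_covariance(a, h, q, r, iters=1000):
    """Iterate the scalar Kalman Riccati recursion to its fixed point.

    Returns (p_prior, p_post, kalman_gain) at (approximate) stationarity.
    """
    p_post = q
    for _ in range(iters):
        p_prior = a * a * p_post + q                      # prediction step
        kalman_gain = p_prior * h / (h * h * p_prior + r)  # measurement gain
        p_post = (1.0 - kalman_gain * h) * p_prior         # update step
    return p_prior, p_post, kalman_gain


def stationary_lqr_gain(a, b, w_x, w_u, iters=1000):
    """Iterate the scalar LQR Riccati recursion to its fixed point.

    Returns (s, feedback_gain); the control law is u = -feedback_gain * (x_hat - x_node).
    """
    s = w_x
    for _ in range(iters):
        feedback_gain = a * b * s / (w_u + b * b * s)
        s = w_x + a * a * s - a * b * s * feedback_gain
    return s, feedback_gain


# Hypothetical scalar system around a roadmap node.
a, b, h = 1.0, 1.0, 1.0
q, r = 0.01, 0.04          # process and measurement noise variances (assumed)
p_prior, p_post, kalman_gain = stationary_kalman_covariance(a, h, q, r)
s, feedback_gain = stationary_lqr_gain(a, b, w_x=1.0, w_u=1.0)

# In this scalar picture, a stationary belief is the pair (node state, p_post).
print("stationary estimation variance:", p_post)
print("closed-loop pole:", a - b * feedback_gain)
```

With these assumed values the closed-loop pole has magnitude below one, so the estimate mean is pulled toward the node while the estimation variance settles at `p_post`.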

The properties of LQG controllers make it provably possible to drive a distribution around a point to that point with zero velocity. They also guarantee the existence of these stationary distributions and provide a way to construct them. In SLQG-FIRM, the nodes are stationary beliefs, each characterized by a mean and a positive-semidefinite covariance matrix. Notably, the mean of each belief is the original node from the probabilistic roadmap (PRM). The edges are local controllers, each of which drives the system from one node to another by following the PRM edge with an LQG controller. At a high level, SLQG-FIRM constructs the roadmap in the following way:

Dynamic Feedback Linearization-based (DFL) FIRM is another concrete instantiation of FIRM, designed for nonholonomic systems, which struggle to achieve belief reachability. DFL-FIRM uses DFL-based controllers as belief controllers, which allows the system to stabilize to belief nodes. Using these DFL-based controllers, DFL-FIRM still preserves the "principle of optimality" and all other FIRM properties.

Robust Online Belief Space Planning in Changing Environments: Application to Physical Mobile Robots

This section describes how to address the physical issues that arise when trying to conduct motion planning under uncertainty with FIRM.

SLAP: Simultaneous Localization and Planning Under Uncertainty for Physical Mobile Robots via Dynamic Replanning in Belief Space